Month: June 2018

Fig. 1: Integrated design and simulation in Energy3D

In workplaces, engineering design is supported by contemporary computer-aided design (CAD) tools capable of virtual prototyping, a full-cycle process to explore the structure, function, and cost of a…

As a software tester at the Concord Consortium, Evangeline Ireland sleuths for bugs in our projects. She ferrets out the source of known glitches (why does hitting the spacebar repeatedly create an error in Geniventure?) and discovers problems before software is released. “If it’s going to be used by teachers without the researchers there to […]

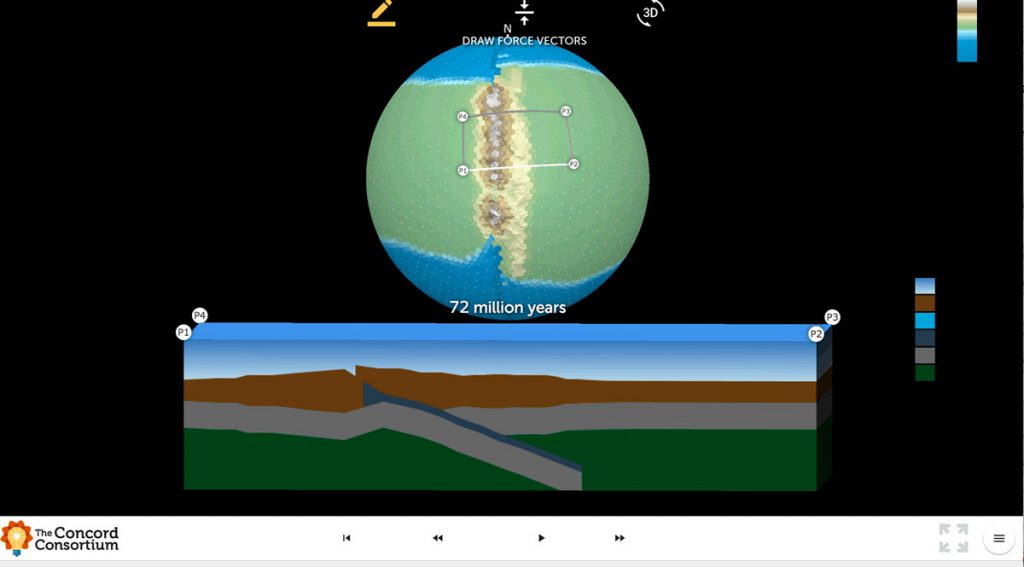

We are excited to introduce the *beta version* of Tectonic Explorer, our newest Earth system model, developed by our GEODE project. Tectonic Explorer features a complex system of interacting tectonic plates around an entire planet — in this case a simplified, Earth-like planet. For the first time in K-12 education, students will be able to […]

The Concord Consortium is thrilled to announce a new initiative to transform STEM teaching and learning and reach more students with educational technology. By applying current and future technologies in unique ways, generating new collaborations, and leveraging the power of open educational resources, a group of innovative thought leaders is working to revolutionize STEM learning […]

Eighteen states and the District of Columbia, representing more than a third of the U.S. student population, have adopted the Next Generation Science Standards (NGSS) since their release in 2013, and more are expected to follow. To make the most of NGSS, teachers need three-dimensional assessments that integrate disciplinary core ideas, crosscutting concepts, and science […]

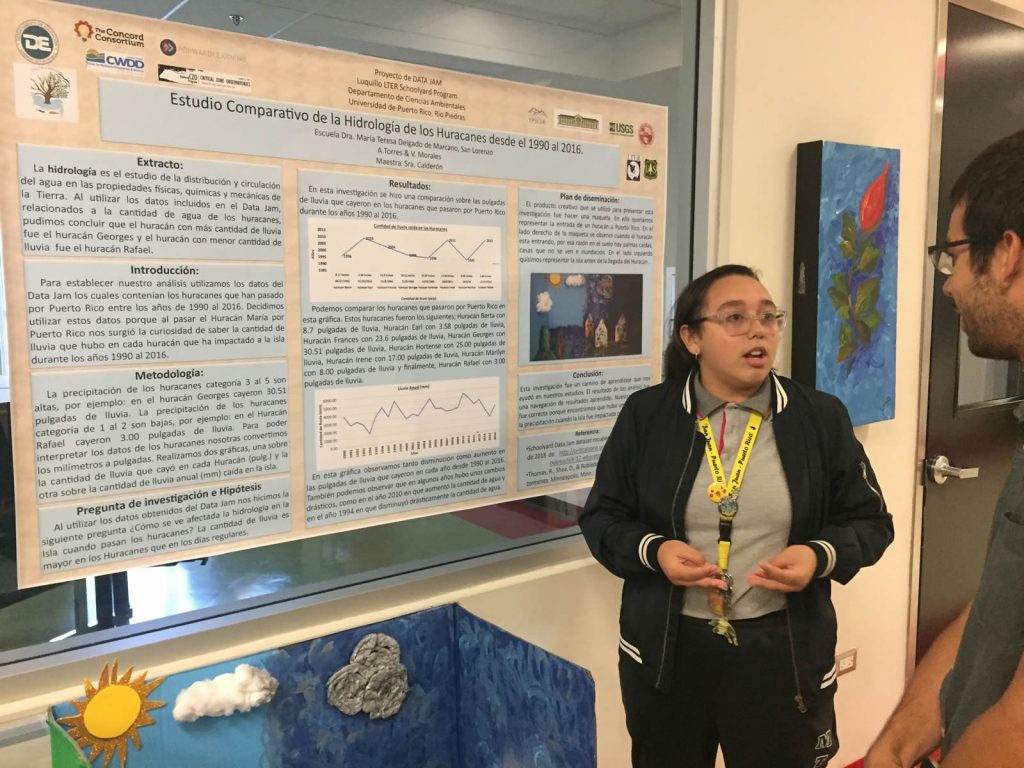

Students in the Luquillo Schoolyard Project in Puerto Rico are jamming on data. Large, long-term environmental data! And our free, online tool CODAP (Common Online Data Analysis Platform) joined their Data Jam to help students visualize and explore data in an inquiry-oriented way. El Yunque National Forest, the only tropical rainforest in the U.S. National […]