Author: Trudi Lord

Last summer, thick plumes of wildfire smoke from northern Canada blanketed cities as far south as Washington, D.C., in an eerie orange haze. Record-breaking wildfires in provinces across Canada resulted in catastrophic damage to homes and communities, the destruction of more than 45.7 million acres of forest, and dangerously unhealthy air quality throughout the Northeast […]

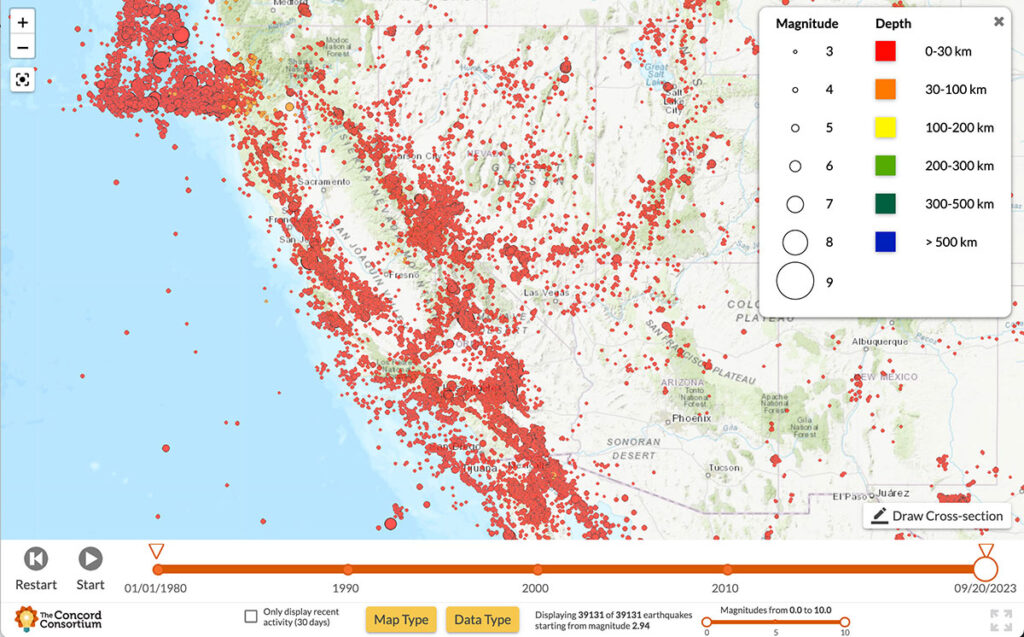

With most California residents living within a 30-minute drive of one of the state’s 500 active fault zones, the threat of earthquakes looms large. Scientists are constantly monitoring seismic activity, conducting risk assessments to determine when and where earthquakes may occur, and predicting the potential impacts to surrounding communities. Our new National Science Foundation-funded YouthQuake […]

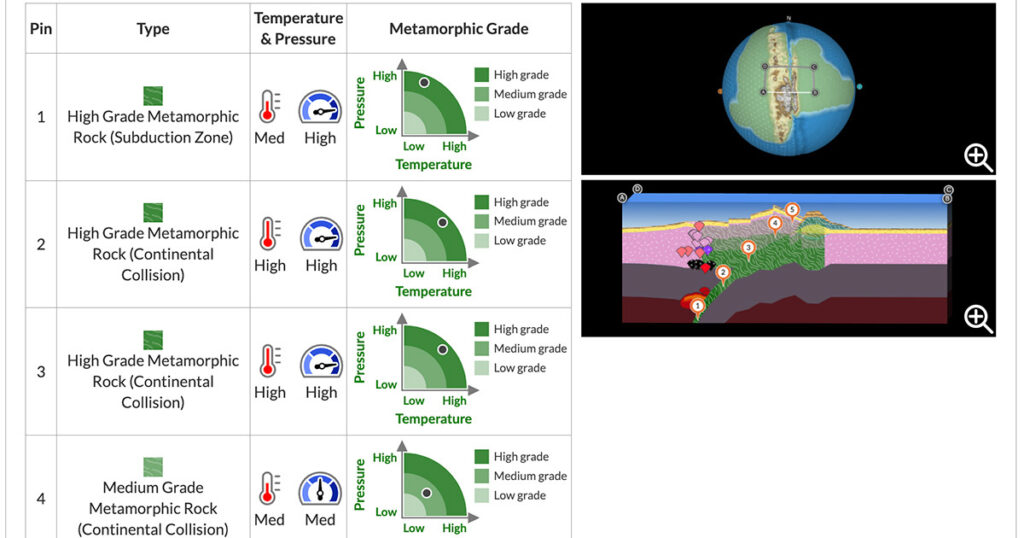

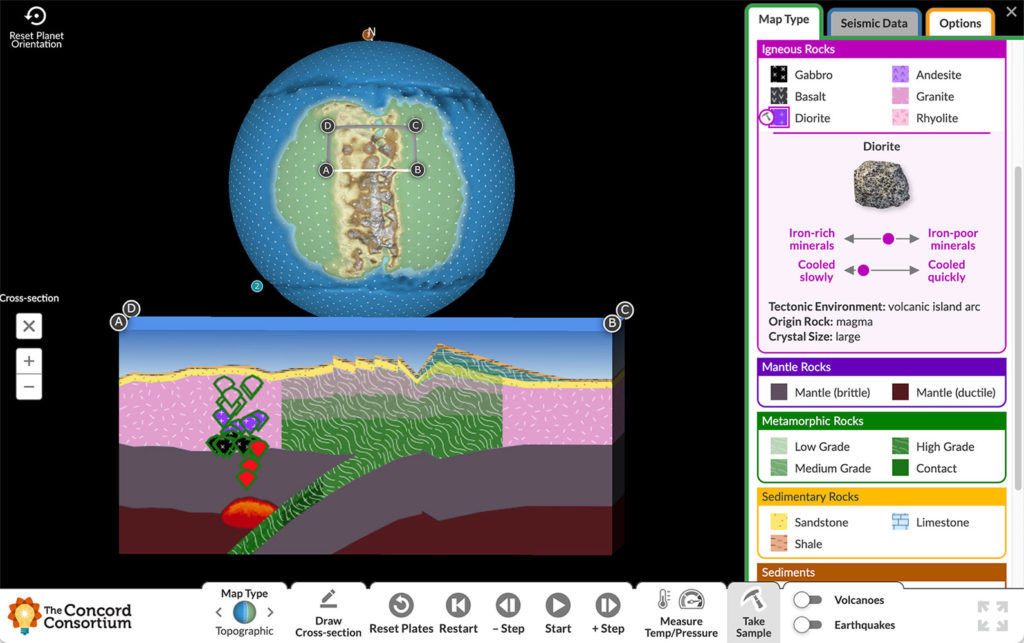

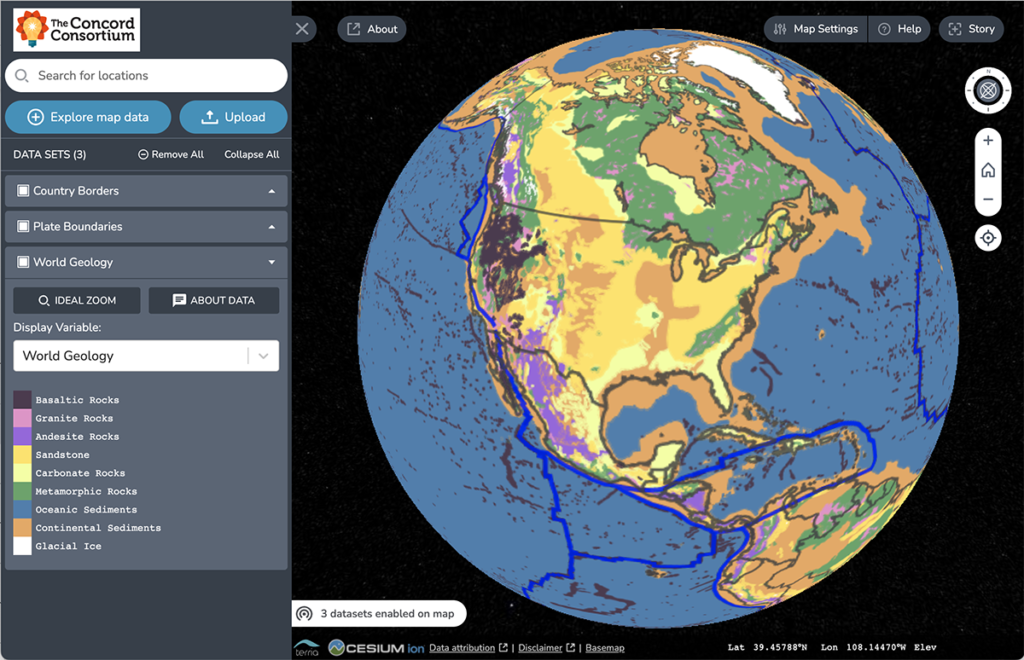

Across the Earth, rock is being created, destroyed, and transformed all the time. If you were to witness a volcanic eruption up close, you would see the birth of new rock. While such an eruption results in a dramatic display of Earth’s power, many rock-forming processes are invisible as they take place deep beneath Earth’s […]

Ambitious Science Teaching now includes a focus on equity and reaching diverse student populations. One principle of the Critical and Cultural Approaches to Ambitious Science Teaching (C2-AST) framework (Thompson et al., 2021) is to situate learning around phenomena that prioritize students’ communities and cultures, their local environment, and daily experience. While this can be a […]

While our main offices are located in Concord, Massachusetts, and El Cerrito, California, nine of our 45 employees call other states home. Like many companies, we began working remotely during the pandemic, and most of us continue to do so much of the time. But as an organization dedicated to innovating and inspiring equitable, large-scale […]

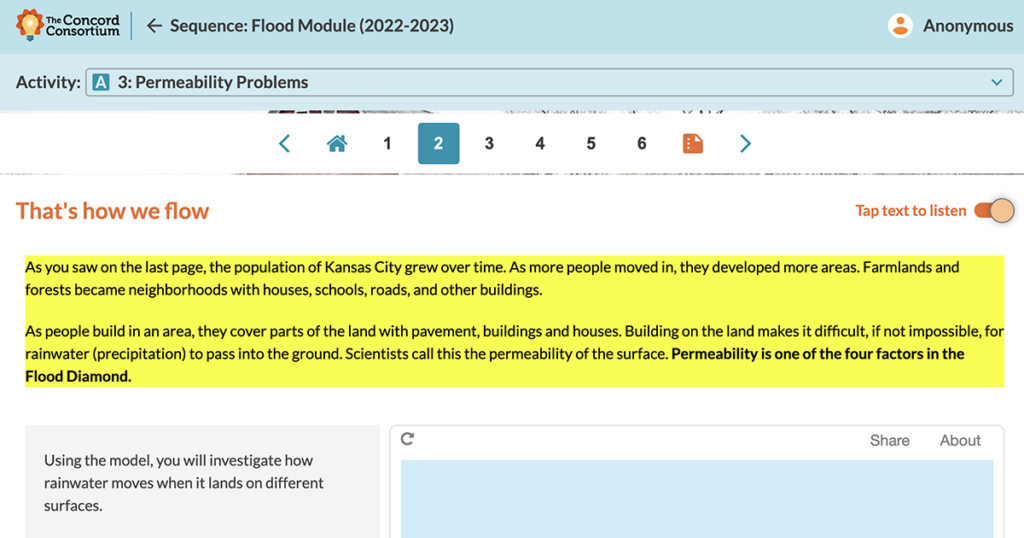

Teachers have used our interactive online activities for many years with great success. However, the same request has come up time and time again: “Is there any way that students can have the page read out loud?” Until recently, this could only be done with browser plug-ins that were complicated to install on students’ computers […]

Traditional geologic maps beautifully illustrate the many different types of rock found on Earth’s surface. Geoscientists can look at a colorful geologic map and immediately spot important pieces of the story of Earth’s geologic history. For instance, in the map below, the red area found in Canada represents bedrock formed in the Late Archean Era […]

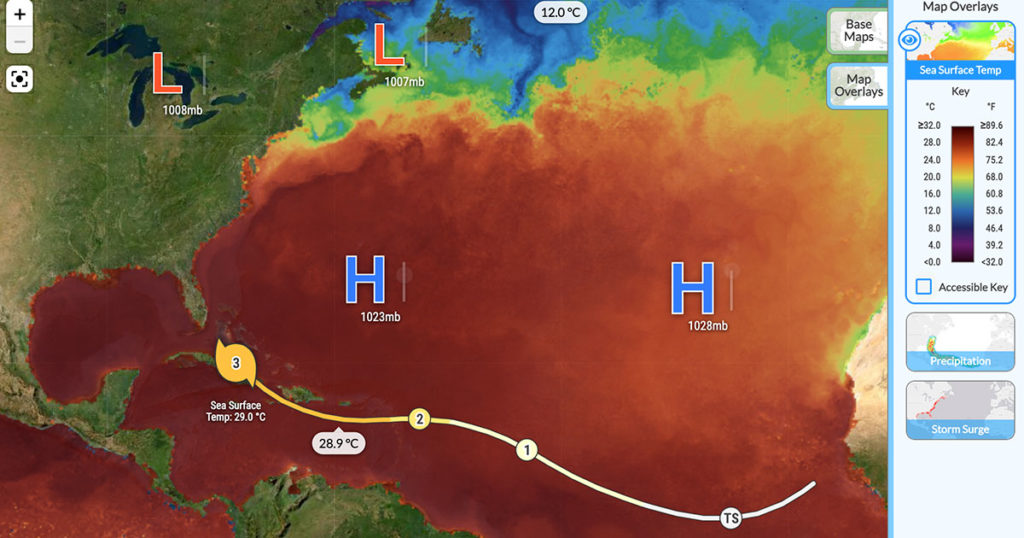

It used to be that natural disasters like wildfires, floods, and hurricanes each had their own season, likely to occur in predictable locations and at certain times of the year. Changes in the climate have expanded and shifted both the map of where people may be at risk and the months when these hazards most […]

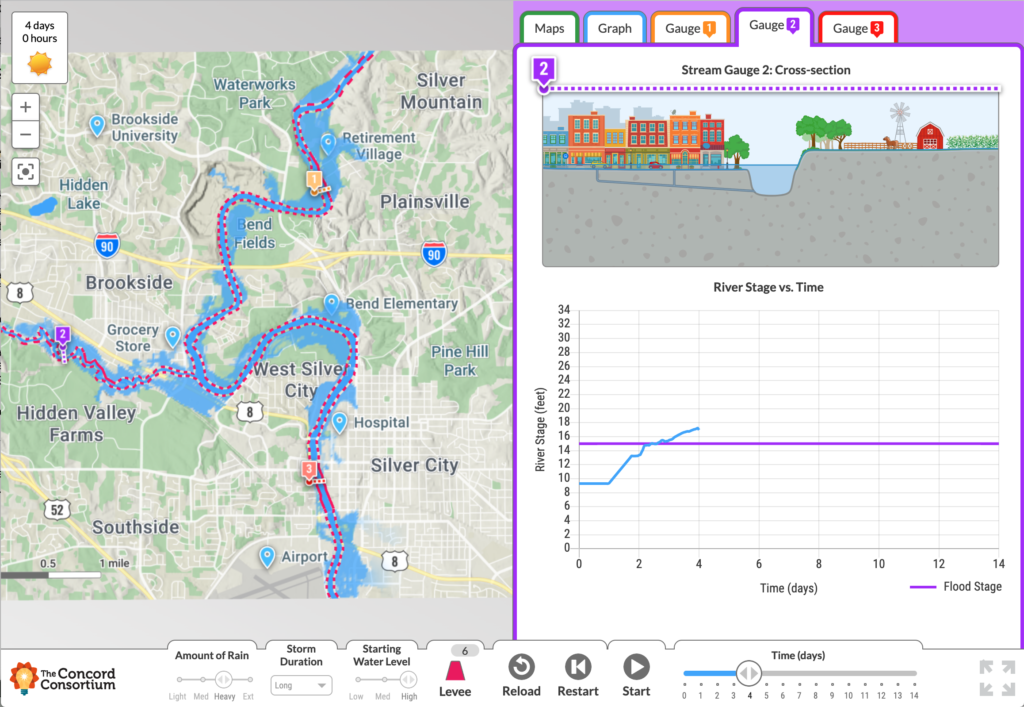

In the last 30 years, the risk of inland flooding in the United States has increased dramatically. Extreme rainfall events have become more frequent, causing widespread flooding and water damage. The costs to repair, rebuild, and remediate flooding have grown each year. Flooding in the Midwest in 2019 alone affected 14 million people and came […]

Climate change, and the rise of the natural hazards that climate change brings, has been at the top of news feeds every week over the past year. Extreme events such as floods, droughts, and wildfires are expected to increase in the future. What does that mean for those of us living in the path of […]