Social media has been exploding with New Year’s resolutions since early fall. If you’d like to get a head start on your own educational resolutions for the next calendar year, we’ve got you covered. Want to help students see Earth science as a lab science? Add more data science activities to your high school classes? […]

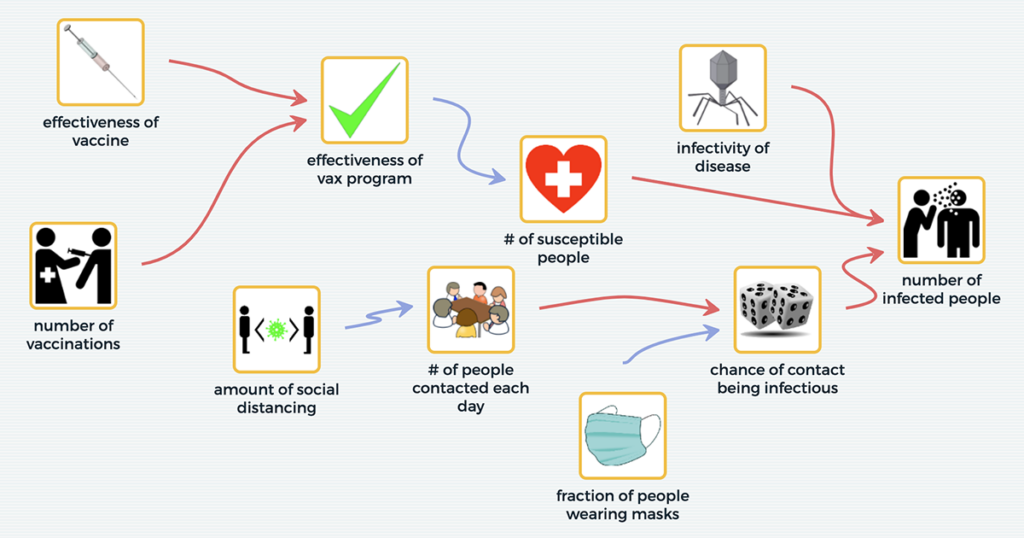

From local environmental justice issues to global phenomena such as climate change, complex problems often require systems thinking to address them. Since 2018, the National Science Foundation-funded Multilevel Computational Modeling project, a collaboration between the Concord Consortium and the CREATE for STEM Institute at Michigan State University, has researched how the use of our SageModeler […]

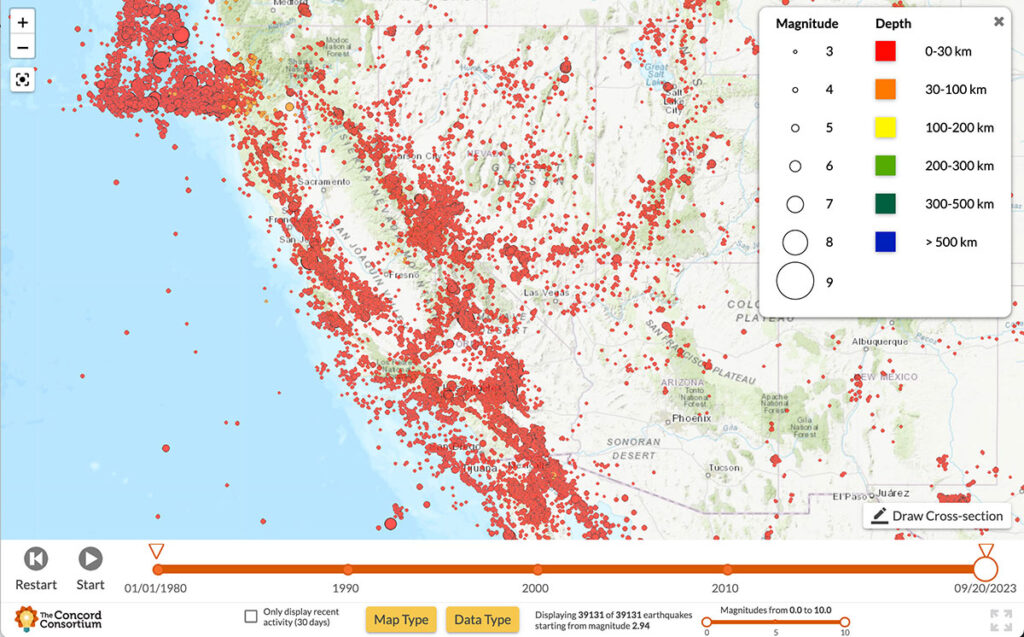

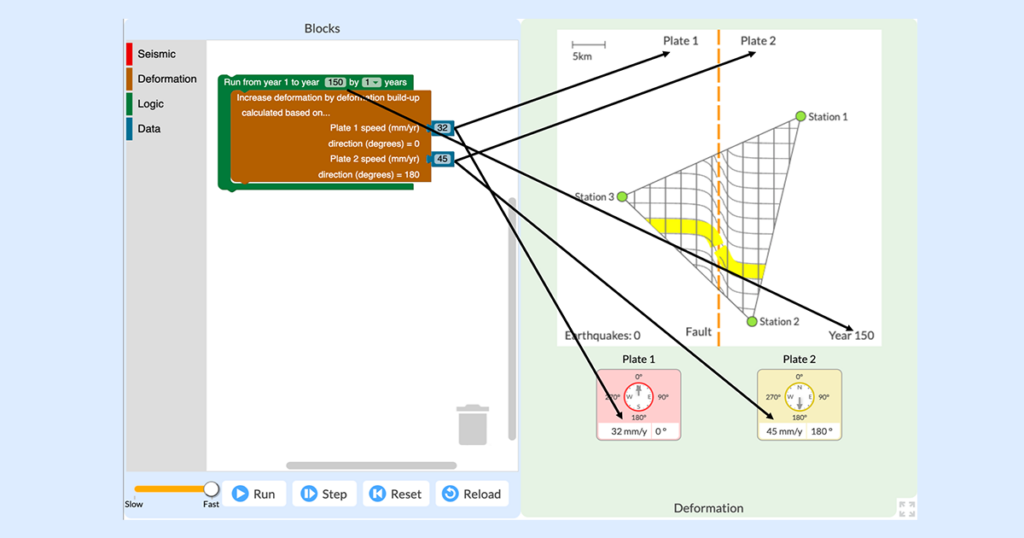

With most California residents living within a 30-minute drive of one of the state’s 500 active fault zones, the threat of earthquakes looms large. Scientists are constantly monitoring seismic activity, conducting risk assessments to determine when and where earthquakes may occur, and predicting the potential impacts on surrounding communities. Our new National Science Foundation-funded YouthQuake […]

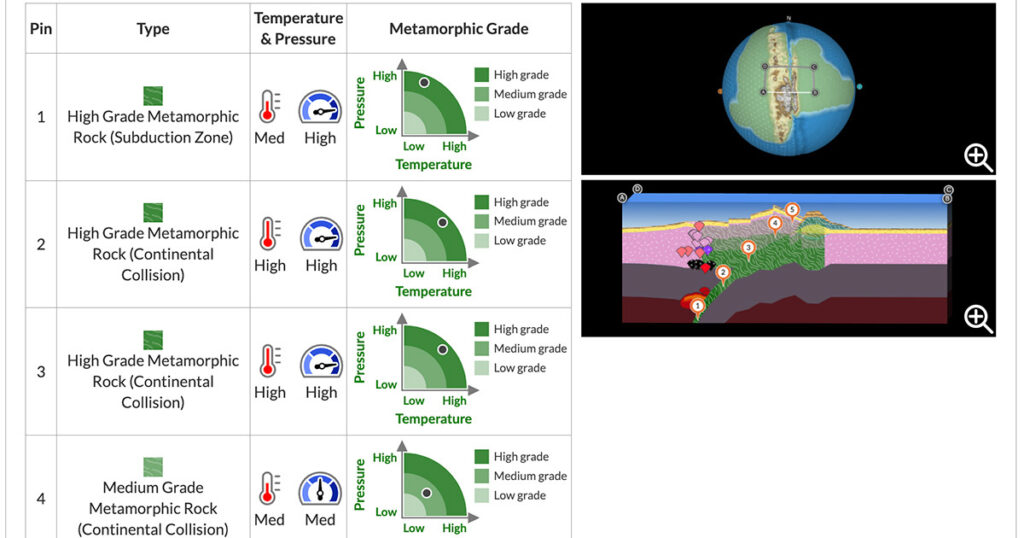

Stephanie Seevers is an Earth science teacher in Colorado and a consultant on the TecRocks project. I was talking to my 9th grade Earth and Space Science students recently about why they think so many people lack a solid understanding of our planet and its history. We brainstormed ideas, and while several theories sounded valid, […]

Across the Earth, rock is being created, destroyed, and transformed all the time. If you were to witness a volcanic eruption up close, you would see the birth of new rock. While such an eruption results in a dramatic display of Earth’s power, many rock-forming processes are invisible as they take place deep beneath Earth’s […]

Every education project funded by the National Science Foundation (NSF) is subject to flexible yet high expectations for the proposed research. NSF is investing in our ability to develop new technology, new curriculum, and new research that contributes innovative ideas and products to further the field of STEM education. When we are awarded an NSF […]

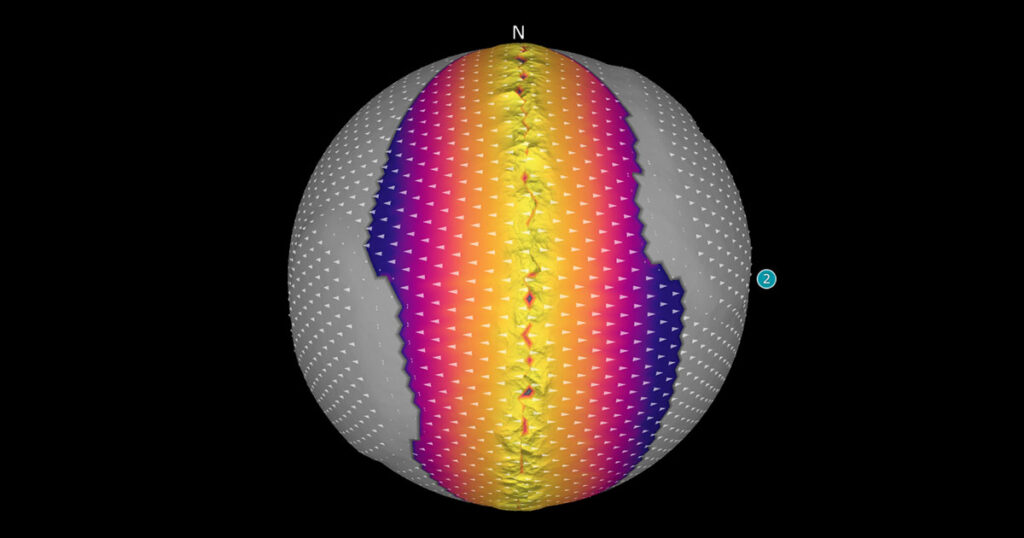

The everyday work of modern seismologists—the scientists who study earthquakes, hazards, and risks—exists right at the intersection of two NGSS practices: “Analyzing and Interpreting Data” and “Using Mathematics and Computational Thinking.” Seismologists collect huge amounts of data from satellites, remote sensors, and GPS networks in order to monitor Earth’s surface for signs of land movement […]

Moths live around the world. Despite their ubiquity, they haven’t been studied nearly as much as their daytime counterparts, not even by entomologists. Butterflies have gotten most of the attention. The MothEd: Authentic Science for Elementary and Middle School Students project is exploring ways to deepen independent science inquiry among young learners using technology […]

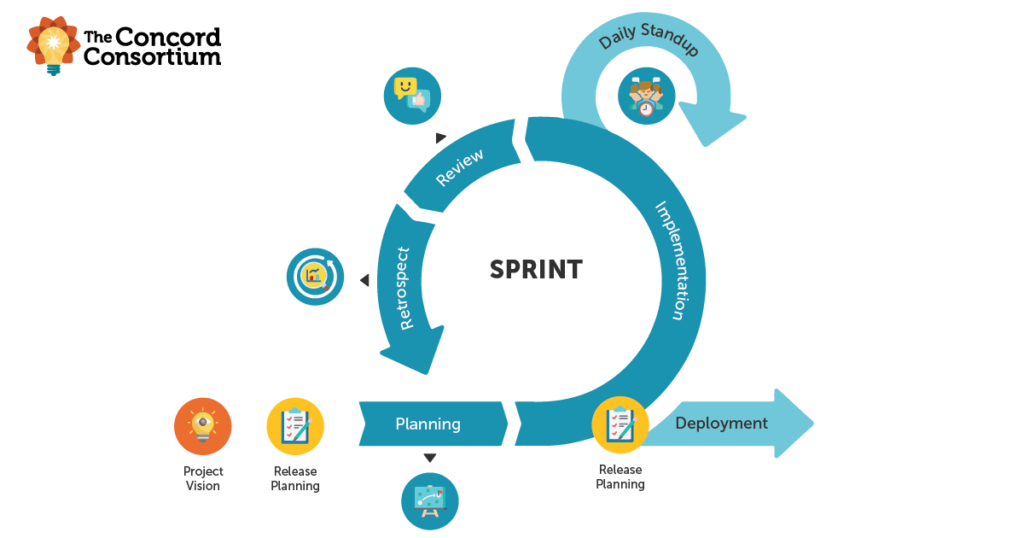

Science teacher to Scrum Master may not seem like a natural transition. Indeed, my friends and family wondered about my recent career switch to Scrum Master at the Concord Consortium. Let me explain how I got here. According to Scrum Alliance®, a Scrum Master is “the Scrum team member tasked with fostering an effective and […]

As an organization dedicated to innovating and inspiring equitable, large-scale improvements in STEM teaching and learning through technology, we develop a lot of technology. I mean a lot. Our Scrum approach to technology development is always evolving. We adapt to each research project, team, person, and circumstance to be as efficient and effective as possible. […]